Programming, in the context of modern computing, is the process of writing instructions that computers can understand and execute to perform tasks. The history of programming, however, begins long before modern digital computers existed. Its evolution is deeply intertwined with the development of mathematics, mechanical computation devices, and, eventually, digital computers. The journey runs from early mathematical theories to today’s high-level programming languages, which power nearly every aspect of modern life.

Early Roots: The Foundations of Programming

The idea of giving instructions to a machine can be traced back centuries. One of the earliest devices that could be “programmed” was the Jacquard loom in the early 1800s. Invented by Joseph Marie Jacquard, this loom used punched cards to control the weaving of complex patterns in textiles. While not a computer in the modern sense, the Jacquard loom demonstrated how a machine could follow a sequence of operations based on external input (in this case, the punched cards). This principle would later become crucial in early computer programming.

Image: A Jacquard loom showing information punchcards, National Museum of Scotland

Image: A punch for Jacquard cards

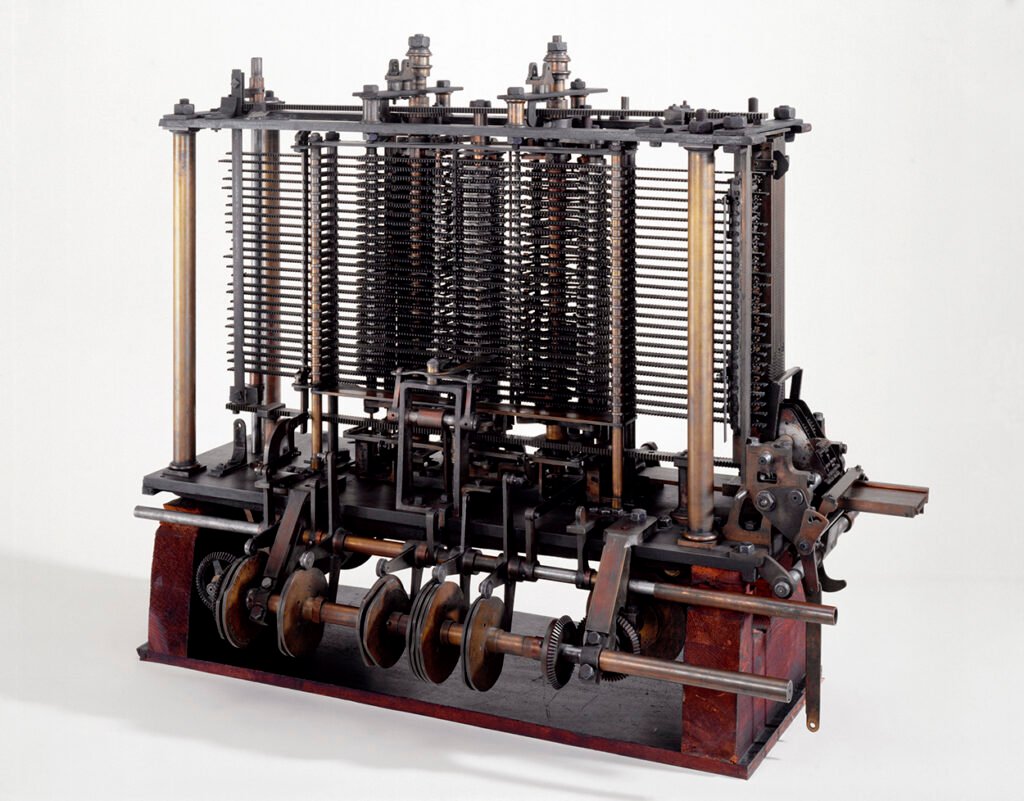

In the 1830s, Charles Babbage, an English mathematician and inventor, designed the Analytical Engine, which many consider the first design for a general-purpose mechanical computer. The machine was intended to perform any calculation through a series of instructions supplied on punched cards, a concept borrowed from the Jacquard loom. Although the Analytical Engine was never completed, its design laid the theoretical foundation for future computing.

Ada Lovelace, a mathematician and close collaborator of Babbage, is often celebrated as the first computer programmer. She wrote algorithms that the Analytical Engine could theoretically execute, showing that it could go beyond mere arithmetic to perform more general tasks. In her notes on the engine, published in 1843, Lovelace set out a method for computing Bernoulli numbers and speculated that such a machine might one day manipulate symbols other than numbers, for example to compose music. Her work is recognized as one of the earliest examples of algorithmic thinking.

The Birth of the Modern Computer

The first half of the 20th century saw the development of machines that came ever closer to modern computers, driven primarily by the needs of scientific research, cryptography, and military applications during World War II.

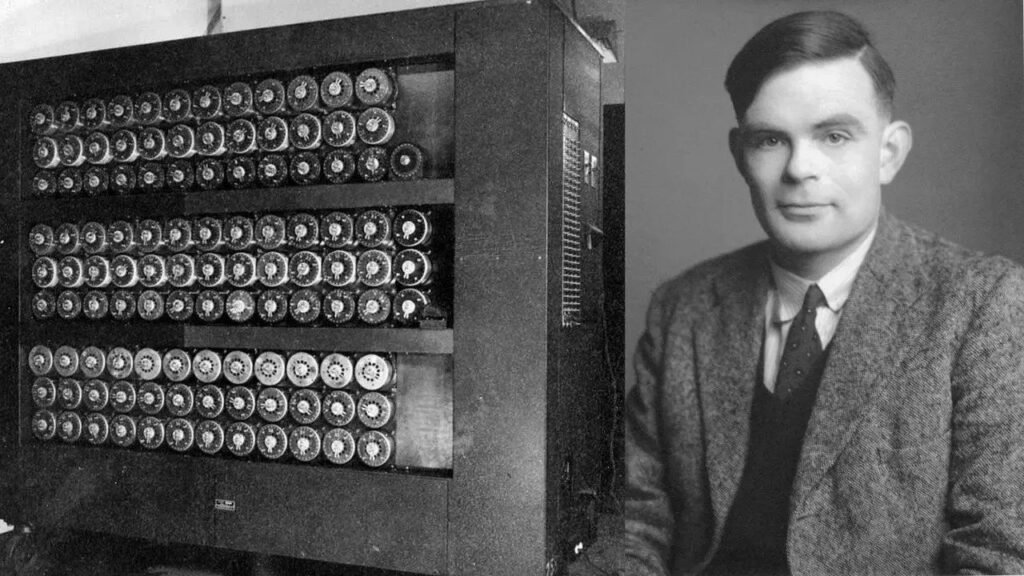

In the 1930s, Alan Turing, a British mathematician, introduced the concept of the Turing machine, a theoretical device that could simulate the logic of any computer algorithm. The Turing machine helped formalize the concept of computation and became a fundamental theoretical model for computer science. Turing’s ideas were pivotal in shaping the future of computing and programming.

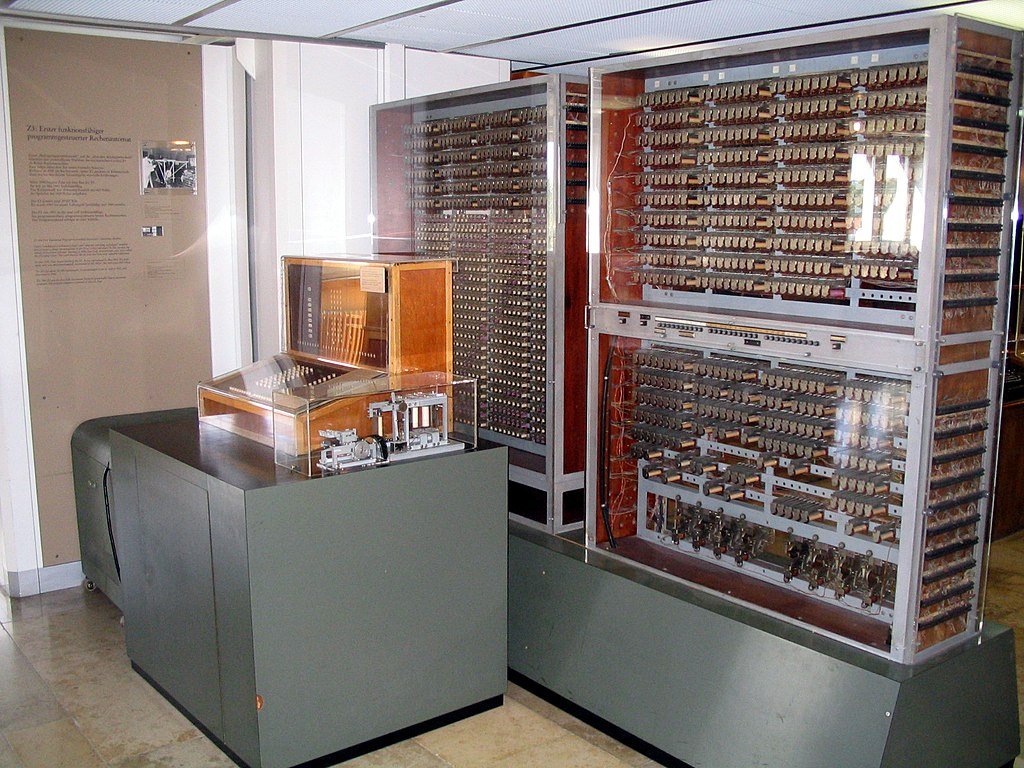

During World War II, Konrad Zuse, a German engineer, built the Z3, completed in 1941 and widely regarded as the world’s first working programmable digital computer. The Z3 used binary arithmetic and read its programs from punched film, marking a major leap forward in computational devices.

Image: Replica of the Zuse Z3 in the Deutsches Museum in Munich, Germany

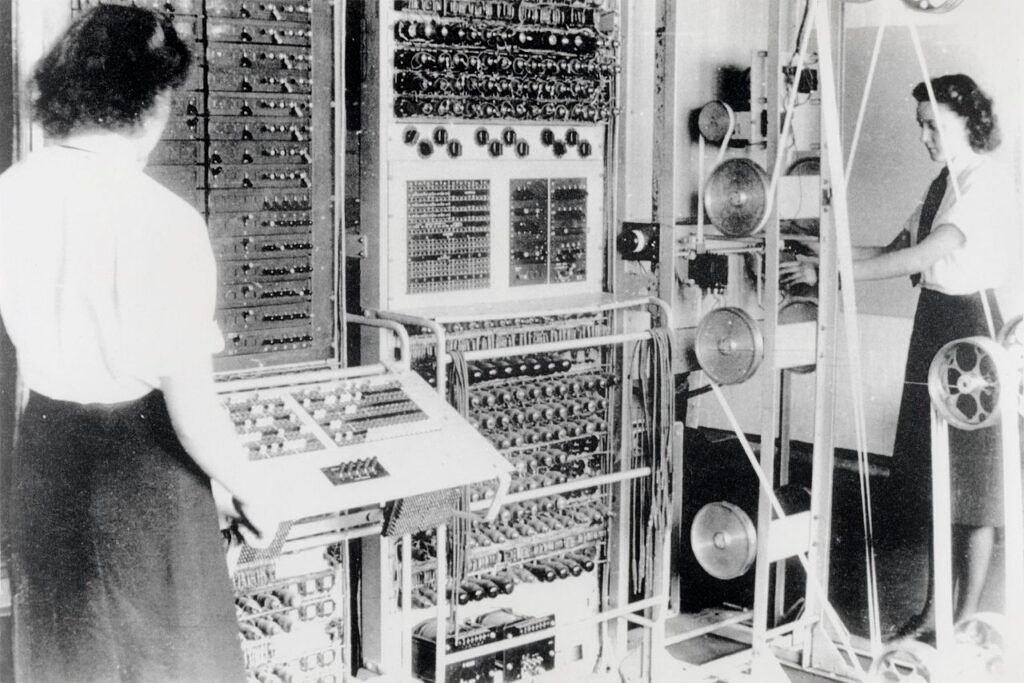

Meanwhile, Colossus, a machine built in Britain to help break German ciphers, was an early programmable electronic computer, albeit one specialized for cryptanalysis.

Image: A Colossus Mark 2 codebreaking computer being operated by Dorothy Du Boisson (left) and Elsie Booker (right), 1943

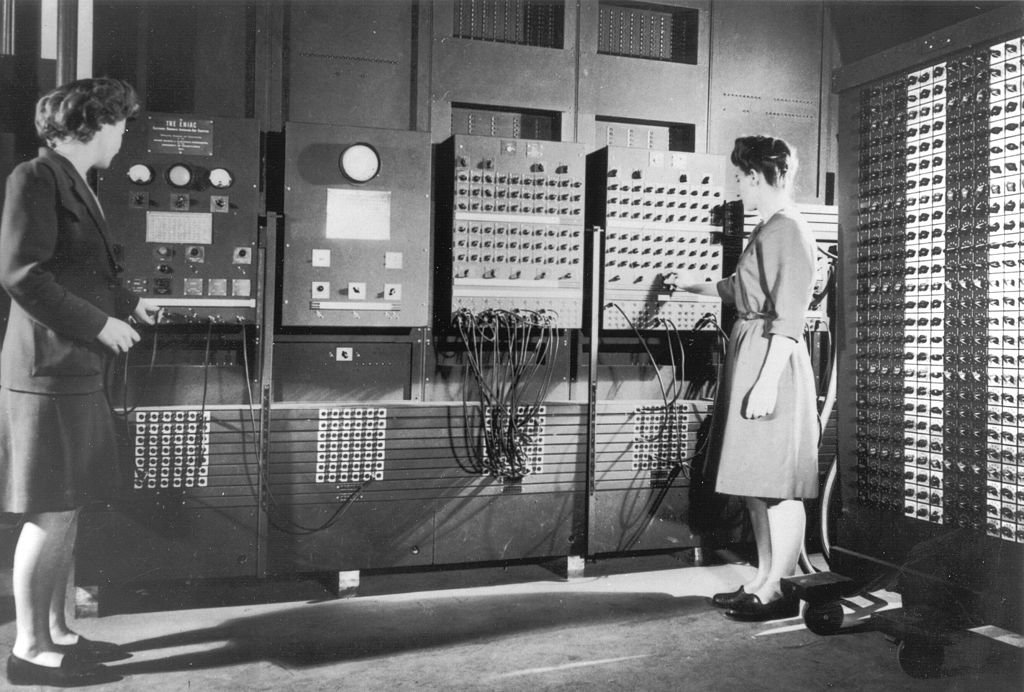

In the United States, the ENIAC (Electronic Numerical Integrator and Computer) was completed in 1945. Developed by John Presper Eckert and John Mauchly, ENIAC was one of the first general-purpose digital computers. It used decimal rather than binary and required manual rewiring and configuration to run different programs, but it could be reprogrammed to solve a variety of problems. This reconfigurable aspect of ENIAC hinted at the future of programmable computers.

The Emergence of Programming Languages

Early computers were programmed at the level of the hardware itself. ENIAC was set up with cables and switches, and the stored-program machines that followed were programmed in machine language: binary instructions executed directly by the computer’s hardware. Programming in machine language was tedious and error-prone, requiring programmers to work with long sequences of 0s and 1s. As computers became more powerful, the need grew for more accessible ways to program them.

Image: Programmers Betty Jean Jennings (left) and Fran Bilas (right) operating ENIAC’s main control panel at the Moore School of Electrical Engineering, c. 1945 (U.S. Army photo from the archives of the ARL Technical Library)

1. Assembly Language

The first step toward modern programming languages came with the development of assembly language in the late 1940s and early 1950s. Assembly language uses mnemonic codes to represent machine instructions, making it easier for programmers to write and understand code. Each mnemonic corresponds to a machine instruction; a program called an assembler translates the mnemonics into the binary form that the hardware executes directly.

Assembly language marked a significant improvement over machine code, but it was still highly hardware-dependent. Each type of computer had its own assembly language, meaning programs written for one machine wouldn’t work on another without significant rewriting.
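To make the mnemonic-to-binary translation concrete, here is a minimal sketch of a toy assembler in Python. The instruction set is entirely hypothetical: the four mnemonics, their opcode values, and the 8-bit word layout (a 4-bit opcode followed by a 4-bit operand) are assumptions made for illustration and do not describe any real machine.

```python
# Hypothetical instruction set: each 8-bit word is a 4-bit opcode
# followed by a 4-bit operand. These values are invented for this sketch.
OPCODES = {
    "LOAD": 0b0001,
    "ADD": 0b0010,
    "STORE": 0b0011,
    "HALT": 0b1111,
}


def assemble(source):
    """Translate lines of mnemonics into 8-bit binary strings."""
    words = []
    for line in source.strip().splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        mnemonic = parts[0].upper()
        if mnemonic not in OPCODES:
            raise ValueError(f"unknown mnemonic: {mnemonic}")
        # Instructions without an operand (such as HALT) get operand 0.
        operand = int(parts[1]) if len(parts) > 1 else 0
        if not 0 <= operand <= 15:
            raise ValueError(f"operand out of range: {operand}")
        word = (OPCODES[mnemonic] << 4) | operand
        words.append(format(word, "08b"))
    return words


program = """
LOAD 2
ADD 3
STORE 4
HALT
"""

machine_code = assemble(program)
for text, word in zip(program.strip().splitlines(), machine_code):
    print(f"{text:<8} -> {word}")
```

Because the opcode table is tied to one imagined machine, the same source text would need a different table, and possibly different mnemonics, for any other hardware. That is the portability problem described above.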

2. High-Level Languages

In the 1950s, as computers became more widespread and complex, the need for more abstract programming languages that were easier to learn and use became apparent. The first high-level programming languages emerged during this period, laying the foundation for the languages we use today.

One of the earliest high-level languages was FORTRAN (FORmula TRANslation), developed in the mid-1950s by IBM for scientific and engineering applications. FORTRAN allowed programmers to write code that was much closer to human-readable algebraic notation, which the computer would then translate into machine code. This made programming more accessible and drastically reduced the time required to write complex programs.

A few years later, in 1959, COBOL (Common Business-Oriented Language) was designed, primarily for business applications. Unlike FORTRAN, which focused on scientific computation, COBOL was built for large-scale data processing and was intended to be readable by non-specialists. Its English-like syntax made it appealing for business professionals working with data.

Another key language that emerged in this era was LISP (LISt Processing), developed by John McCarthy in 1958. LISP was designed for artificial intelligence research, and its dialects are still used today in AI and symbolic computation. It helped popularize recursion as a programming technique and pioneered automatic memory management through garbage collection, both of which have had a lasting influence on programming.
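Recursion, mentioned above, means defining a function in terms of itself on a smaller input. The sketch below uses Python rather than LISP, and the function name `total` is chosen only for illustration. It mirrors the head-and-tail style of list processing associated with LISP: the function handles the first element, then calls itself on the rest.

```python
def total(items):
    """Recursively sum a list of numbers that may contain nested lists."""
    if not items:
        return 0  # base case: an empty list sums to zero
    head, tail = items[0], items[1:]
    if isinstance(head, list):
        # A nested list is summed by the same function.
        return total(head) + total(tail)
    return head + total(tail)


print(total([1, [2, 3], [4, [5]]]))  # prints 15
```

The slices create new lists at each step, and Python reclaims them automatically once they are no longer referenced, so the programmer never frees memory by hand.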

3. Structured Programming and C

As programming languages continued to evolve through the 1960s and 1970s, the focus shifted towards improving program structure and reliability. One of the major developments was the introduction of structured programming, a paradigm that encourages the use of clear, understandable control structures (such as loops and conditionals) to improve code readability and maintainability.
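As a small illustration of the control structures named above, the following Python sketch uses one loop and one conditional, each with a single entry and a single exit. The function name, the sample readings, and the threshold are assumptions made for the example.

```python
def classify(readings, limit):
    """Count how many readings are above the limit and how many are not."""
    counts = {"ok": 0, "high": 0}
    for value in readings:      # loop: visit each reading exactly once
        if value > limit:       # conditional: choose one of two branches
            counts["high"] += 1
        else:
            counts["ok"] += 1
    return counts


print(classify([3, 8, 5, 12], limit=6))  # prints {'ok': 2, 'high': 2}
```

The flow of control can be read from top to bottom without following jumps, which is the readability benefit that structured programming aims for.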

The language C, developed by Dennis Ritchie at Bell Labs in the early 1970s, became one of the most influential languages in this era. C was designed for system programming and allowed programmers to write efficient code that could run on a variety of hardware platforms. Its simplicity, flexibility, and performance made it immensely popular, and it remains one of the most widely used languages today.

C also laid the groundwork for later languages like C++, which added object-oriented programming (OOP) features to C, and Objective-C, which was for many years the primary language for developing applications on Apple platforms.

The Rise of Object-Oriented Programming (OOP)

In the 1980s, the programming world saw the rise of object-oriented programming (OOP), a paradigm that emphasizes the use of “objects”—self-contained units of code that contain both data and methods for manipulating that data. OOP encourages modularity, code reuse, and scalability, making it well-suited for large, complex software systems.
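A minimal sketch of such an object in Python: the hypothetical `Account` class below bundles data (an owner and a balance) with the methods that are allowed to change that data. The class name, fields, and rules are assumptions chosen for illustration.

```python
class Account:
    """A self-contained unit: data plus the methods that manipulate it."""

    def __init__(self, owner, balance=0):
        self.owner = owner
        self.balance = balance

    def deposit(self, amount):
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self.balance += amount

    def withdraw(self, amount):
        if amount > self.balance:
            raise ValueError("insufficient funds")
        self.balance -= amount


account = Account("Ada", balance=100)
account.deposit(50)
account.withdraw(30)
print(account.owner, account.balance)  # prints Ada 120
```

Code that uses an `Account` goes through `deposit` and `withdraw` rather than adjusting the balance directly, so the validation rules live in one place and can be reused wherever accounts appear.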

Smalltalk, developed in the 1970s, was one of the first pure OOP languages, but it wasn’t until the release of C++ in the 1980s that OOP gained widespread popularity. C++ extended the C language with classes and objects, giving programmers the ability to write more organized and maintainable code.

In the 1990s, OOP became the dominant paradigm with the rise of languages like Java, which was developed by Sun Microsystems and quickly became popular for web and enterprise development. Java’s “write once, run anywhere” philosophy, combined with its OOP features, made it a go-to language for building cross-platform applications.

The Internet Age and Modern Programming

The 1990s and early 2000s saw the explosive growth of the internet, which fundamentally changed the landscape of programming. Web development became a major focus for programmers, and new languages and frameworks emerged to meet the demand for building websites and online applications.

JavaScript, introduced in 1995 by Netscape, became the standard language for client-side web development. Together with HTML and CSS, JavaScript enabled developers to create dynamic, interactive web pages. On the server side, languages like PHP and Ruby became popular for building web applications.

As programming continued to evolve into the 21st century, high-level languages like Python, Ruby, and JavaScript became increasingly popular due to their simplicity, flexibility, and ease of use. These languages emphasize rapid development and readability, and each is backed by a large ecosystem of libraries and frameworks, making them well suited to modern software development.

Conclusion

From its humble beginnings in mechanical looms and theoretical models, programming has evolved into a vast and complex discipline that underpins nearly every aspect of modern life. The journey from early punched cards to today’s sophisticated high-level languages reflects humanity’s growing understanding of computation and the increasing demands of our technological society.

Programming has moved from specialized, low-level machine instructions to accessible, high-level languages that allow developers to create complex systems with relative ease. Whether it’s building operating systems, designing websites, or developing artificial intelligence, programming continues to shape the world we live in. As technology evolves, the history of programming serves as a reminder of the ingenuity and perseverance that have driven the development of modern computing, and programming will continue to play a central role in the future of innovation.